DeepSeek R1: A Game-Changer Tested on an M1 Mac

DeepSeek is making waves worldwide with its new R1 model, which is claimed to be more or less the best AI model available. One aspect that many people overlook is that its distilled versions can run locally. So I tried running the new LLM on my M1 MacBook Air.

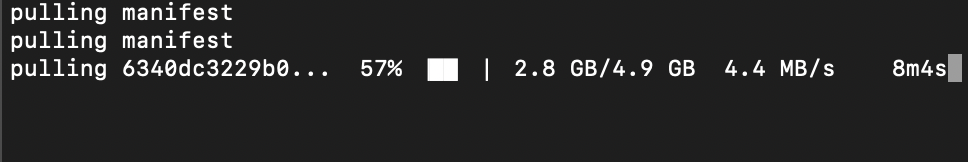

To install the AI model, I used Ollama, a command-line tool for downloading and running LLMs locally.
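Besides the interactive chat in the terminal, Ollama also exposes a local HTTP API, so a model can be queried from a script. The following is a minimal sketch, not what I used for the tests in this post. It assumes the Ollama server is running on its default address and that the model has already been downloaded (for example with `ollama run deepseek-r1:1.5b`); the helper names `build_generate_request` and `ask` are made up for illustration.

```python
import json
import urllib.request

# Assumption: Ollama's local server listens on its default address and port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt to the local model and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        body = json.loads(reply.read().decode("utf-8"))
    # With "stream": False the whole answer arrives in one JSON object.
    return body["response"]
```

Calling `ask("deepseek-r1:1.5b", "Why is the sky blue?")` should then return the model's reply as a string, provided the server is up. The generous timeout is deliberate, since longer answers can take minutes on an M1.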

First, I installed deepseek-r1:1.5b, the smallest version. The installation was quick, but when I entered an English prompt, the response included some Chinese characters. When I switched to German, the AI replied with a mix of German, English, and Chinese. In later conversations, however, none of this strange behavior occurred. The 1.5b model (1.1 GB) is impressively fast and sufficient for debugging small scripts.

Next, I installed deepseek-r1:8b for more complex tasks. The 8b model is said to be comparable to OpenAI’s GPT-4o mini, but it runs locally on an M1 Mac with 16 GB of RAM and 7 GPU cores.

The speed is acceptable. A decent 500-word essay took about two minutes with the 8b model; with the 14b model it took about three minutes.
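To put those timings into perspective, here is a small back-of-the-envelope calculation. It only uses the rough numbers from above (500 words, about two and about three minutes), so treat the results as estimates, not benchmarks. The function names are just for illustration.

```python
def words_per_second(words: int, seconds: float) -> float:
    """Average output rate for a finished text."""
    return words / seconds


def relative_slowdown(baseline_seconds: float, other_seconds: float) -> float:
    """How many times longer the second run took compared to the first."""
    return other_seconds / baseline_seconds


ESSAY_WORDS = 500
SECONDS_8B = 2 * 60   # about two minutes on the 8b model
SECONDS_14B = 3 * 60  # about three minutes on the 14b model

rate_8b = words_per_second(ESSAY_WORDS, SECONDS_8B)
rate_14b = words_per_second(ESSAY_WORDS, SECONDS_14B)
slowdown = relative_slowdown(SECONDS_8B, SECONDS_14B)

print(f"8b:  {rate_8b:.1f} words/s")                    # 8b:  4.2 words/s
print(f"14b: {rate_14b:.1f} words/s")                   # 14b: 2.8 words/s
print(f"14b takes {(slowdown - 1) * 100:.0f}% longer")  # 14b takes 50% longer
```

Note that this counts only the words of the finished essay. The model also produces its thinking block first, so the actual generation rate is higher than these figures suggest.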

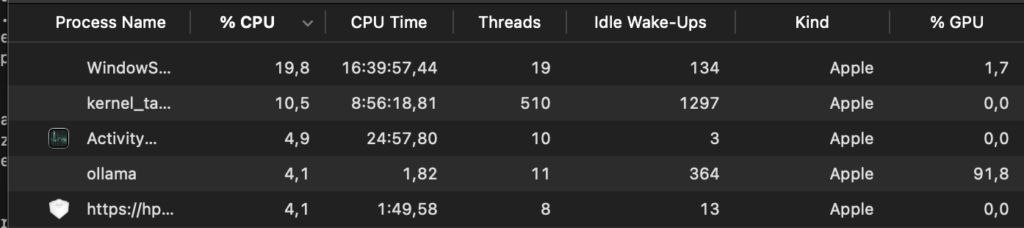

I noticed that the model barely uses the CPU but utilizes 90-95% of the GPU. So it seems to rely heavily on the GPU, and the performance is decent. RAM is probably a bottleneck too.

The language capabilities are great. I used it for text correction, and it worked really well.

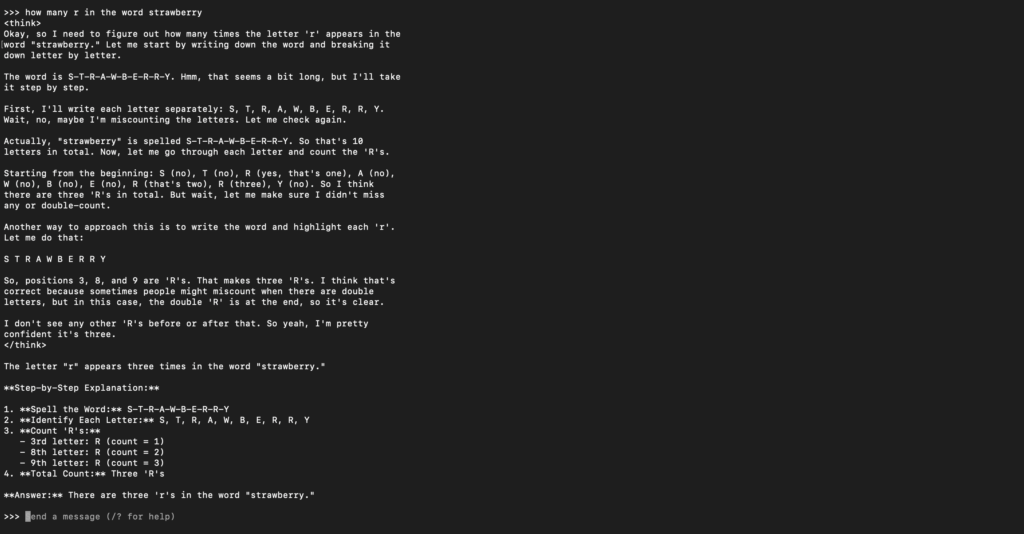

Nevertheless, there are some oddities, like the double “r” in “rasperrry” or the thinking block in general. However, it helps identify mistakes and correct them easily.
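If the thinking block gets in the way, for example when only the corrected text is needed, it can be separated from the final answer. The sketch below assumes the raw reply wraps the reasoning in `<think>` and `</think>` tags, which is how the R1 models mark it; `split_thinking` is a hypothetical helper name.

```python
import re

# Assumption: the reasoning is wrapped in <think>...</think> tags.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_thinking(text: str) -> tuple[str, str]:
    """Return (reasoning, answer) extracted from a raw model reply."""
    # Collect the content of every thinking block, trimmed of whitespace.
    reasoning = "\n".join(part.strip() for part in THINK_BLOCK.findall(text))
    # Remove the blocks themselves to keep only the final answer.
    answer = THINK_BLOCK.sub("", text).strip()
    return reasoning, answer


raw = "<think>\nCount the letters one by one.\n</think>\n\nThere are three."
reasoning, answer = split_thinking(raw)
print(answer)  # There are three.
```

Keeping the reasoning as a separate value instead of discarding it means it is still available for spotting where the model went wrong.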

I haven’t tested any models larger than the 14b, because the speed is already satisfactory with the 8b version of R1. A bigger version would likely reduce performance significantly (the 14b already needs 50% longer) or might not even launch the chat window. For an “offline GPT-4o mini”, the 8b model is more than sufficient.

Since ChatGPT and DeepSeek are available online, I probably won’t use the local version that often. Still, it’s good to know that a lightweight offline version runs even on the weakest M1 with 16 GB of RAM.

